Make .local domains NOT slow in macOS

January 29, 2018

19 comments Linux, MacOSX

Problem

I used to have a bunch of domains in /etc/hosts like peterbecom.dev for testing Nginx configurations locally. But then it became impossible to test local sites in Chrome because any .dev domain is force-redirected to HTTPS. No problem, I switched to .local instead. However, DNS resolution was horribly slow. For example:

    ▶ time curl -I http://peterbecom.local/about/minimal.css > /dev/null
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
      0  1763    0     0    0     0      0      0 --:--:--  0:00:05 --:--:--     0
    curl -I http://peterbecom.local/about/minimal.css > /dev/null  0.01s user 0.01s system 0% cpu 5.585 total

5.6 seconds to open a local file in Nginx.

Solution

Here's that one weird trick to solve it: Add an entry for IPv4 AND IPv6 in /etc/hosts.

So now I have:

    ▶ cat /etc/hosts | grep peterbecom
    127.0.0.1 peterbecom.local
    ::1 peterbecom.local
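To check a hosts file for this, here is a minimal Python sketch that reports which IP versions a hostname is mapped to. The function name `address_families` and the inline sample text are illustrative assumptions; it only parses text and does not touch the real /etc/hosts.

```python
import ipaddress


def address_families(hosts_text, hostname):
    """Return the set of IP versions (4 and/or 6) mapped to hostname."""
    versions = set()
    for line in hosts_text.splitlines():
        # Drop trailing comments and skip blank lines.
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        address, *names = line.split()
        if hostname not in names:
            continue
        try:
            versions.add(ipaddress.ip_address(address).version)
        except ValueError:
            # Not something the ipaddress module can parse; ignore the line.
            continue
    return versions


# Sample content mirroring the two entries shown above.
sample = """
127.0.0.1 peterbecom.local
::1 peterbecom.local
"""
versions = address_families(sample, "peterbecom.local")
print("IPv4 entry:", 4 in versions, "| IPv6 entry:", 6 in versions)
```

If either version is missing for a .local name, that name is a candidate for the slow lookups described above.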

Verification

Ah! Much better. Things are fast again:

    ▶ time curl -I http://peterbecom.local/about/minimal.css > /dev/null
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
      0  1763    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
    curl -I http://peterbecom.local/about/minimal.css > /dev/null  0.01s user 0.01s system 37% cpu 0.041 total

0.04 seconds instead of 5.6.

When Docker is too slow, use your host

January 11, 2018

3 comments Web development, Django, MacOSX, Docker

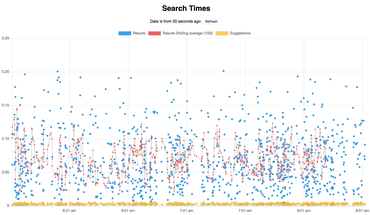

I have a side-project that is basically a React frontend, a Django API server and a Node universal React renderer. The killer feature is its Elasticsearch database that searches almost 2.5M large texts and 200K named objects. All the data is stored in a PostgreSQL and there's some Python code that copies that stuff over to Elasticsearch for indexing.

The PostgreSQL database is about 10GB and the Elasticsearch (version 6.1.0) indices are about 6GB. It's moderately big and even though individual searches take, on average ~75ms (in production) it's hefty. At least for a side-project.

On my MacBook Pro laptop, I use Docker to do development. Docker makes it really easy to run one command that starts memcached, Django, an AWS Product API Node app, create-react-app for the search and a separate create-react-app for the stats web app.

At first I tried to also run PostgreSQL and Elasticsearch in Docker too, but after many attempts I had to just give up. It was too slow. Elasticsearch would keep crashing even though I extended my memory in Docker to 4GB.

This very blog (www.peterbe.com) has a similar stack. Redis, PostgreSQL, Elasticsearch all running in Docker. It works great. One single docker-compose up web starts everything I need. But when it comes to much larger databases, I found my macOS host to be much more performant.

So the dark side of this is that I have to remember to do more things when starting work on this project. My PostgreSQL was installed with Homebrew and is always running on my laptop. For Elasticsearch I have to open a dedicated terminal and go to a specific location to start the Elasticsearch for this project (e.g. make start-elasticsearch).

The way I do this is that I have this in my Django project's settings.py:

    import dj_database_url
    from decouple import config, Csv  # Csv is needed for the ES_HOSTS cast below

    DATABASES = {
        'default': config(
            'DATABASE_URL',
            # Hostname 'docker.for.mac.host.internal' assumes
            # you have at least Docker 17.12.
            # For older versions of Docker use 'docker.for.mac.localhost'
            default='postgresql://peterbe@docker.for.mac.host.internal/songsearch',
            cast=dj_database_url.parse
        )
    }

    ES_HOSTS = config('ES_HOSTS', default='docker.for.mac.host.internal:9200', cast=Csv())

(Actually, in reality the defaults in the settings.py code are localhost and I use docker-compose.yml environment variables to override them, but the point is hopefully still there.)
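To make the "default in code, override by environment variable" idea concrete, here is a rough stdlib-only sketch. It is a stand-in for what python-decouple and dj-database-url do in the real settings, not their actual implementation; `env_config` and `parse_postgres_url` are hypothetical helper names.

```python
import os
from urllib.parse import urlsplit


def env_config(name, default):
    """Return the environment variable if it is set, else the default."""
    return os.environ.get(name, default)


def parse_postgres_url(url):
    """Split a postgresql:// URL into Django-style connection settings."""
    parts = urlsplit(url)
    return {
        "USER": parts.username or "",
        "HOST": parts.hostname or "",
        "PORT": parts.port or "",
        "NAME": parts.path.lstrip("/"),
    }


# With DATABASE_URL unset this uses the localhost default; inside Docker
# the environment variable would point at the host machine instead.
database = parse_postgres_url(
    env_config("DATABASE_URL", "postgresql://peterbe@localhost/songsearch")
)
print(database)
```

Setting DATABASE_URL to postgresql://peterbe@docker.for.mac.host.internal/songsearch in the container's environment switches the host without touching the code.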

And that's basically it. Now I get Docker to do what various virtualenvs and terminal scripts used to do, but with the performance of running the big databases on the host.

How to rotate a video on OSX with ffmpeg

January 3, 2018

5 comments Linux, MacOSX

Every now and then, I take a video with my iPhone and even though I hold the camera in landscape mode, the video gets recorded in portrait mode. Probably because it somehow started in portrait and didn't notice that I rotated the phone.

So I'm stuck with a 90° video. Here's how I rotate it:

ffmpeg -i thatvideo.mov -vf "transpose=2" ~/Desktop/thatvideo.mov

then I check that ~/Desktop/thatvideo.mov looks like it should.

I can't remember where I got this command originally but I've been relying on my bash history for a looong time so it's best to write this down.

The "transpose=2" means 90° counter clockwise. "transpose=1" means 90° clockwise.
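If you'd rather not rely on shell history, the same command can be assembled from a small Python helper. This is only a sketch: `rotate_command` is a hypothetical name, and it builds the argument list without running ffmpeg.

```python
def rotate_command(source, destination, clockwise=False):
    """Build the ffmpeg arguments for a 90 degree rotation.

    transpose=1 rotates clockwise, transpose=2 counter clockwise.
    """
    transpose = 1 if clockwise else 2
    return ["ffmpeg", "-i", source, "-vf", f"transpose={transpose}", destination]


command = rotate_command("thatvideo.mov", "rotated.mov")
print(" ".join(command))
# To actually run it (requires ffmpeg to be installed):
#   import subprocess
#   subprocess.run(command, check=True)
```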

What is ffmpeg??

If you're here because you Googled it and you don't know what ffmpeg is, it's a command line program where you can "programmatically" do almost anything to videos such as conversion between formats, put text in, chop and trim videos. To install it, install Homebrew then type:

brew install ffmpeg

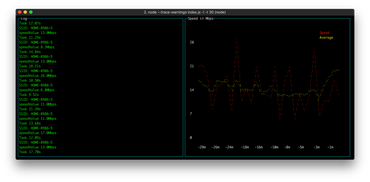

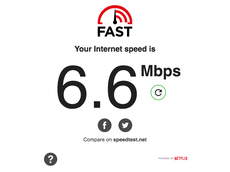

How's My WiFi?

December 8, 2017

2 comments MacOSX, JavaScript, Node

This was one of those late-evening-after-the-kids-are-asleep projects. Followed by some next-morning-sober-readme-fixes-and-npmjs-paperwork.

It's a little Node script that will open https://fast.com with puppeteer, and record, using document.querySelector('#speed-value') what my current Internet speed is according to that app. It currently only works on OSX but it should be easy to fix for someone handy on Linux or Windows.

You can either run it just once and get a readout. That's basically as useful as opening fast.com in a new browser tab.

The other way is to run it in a loop howsmywifi --loop and sit and watch as it tries to figure out what your Internet speed is after multiple measurements.

That's it!

The whole point of this was for me to get an understanding of what my Internet speed is and if I'm being screwed by Comcast. The measurements are very erratic and they might sporadically depend on channel noise on the WiFi or just packet crowding when other devices are overcrowding the pipes with heavy downloads such as video chatting or watching movies or whatever.
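With measurements this erratic, a median tends to say more than any single reading. Here is a small Python sketch of one way to summarize a series of readings; it is not how howsmywifi itself aggregates, and the sample numbers are made up for illustration.

```python
import statistics


def summarize(speeds_mbps):
    """Summarize a list of speed readings (in Mbps)."""
    return {
        "count": len(speeds_mbps),
        "median": statistics.median(speeds_mbps),
        "min": min(speeds_mbps),
        "max": max(speeds_mbps),
    }


# Made-up readings: mostly fast, with a couple of dips.
readings = [42.0, 95.0, 88.0, 12.0, 91.0]
print(summarize(readings))
```

The median shrugs off the occasional dip caused by another device hogging the connection, which a plain average would not.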

And Screenshots!

As a bonus, it will take a screenshot (if you pass the --screenshots flag) of the fast.com page each time it has successfully measured. Not sure what to do with this. If you have ideas, let me know.

Yet another Docker 'A ha!' moment

November 5, 2017

2 comments MacOSX, Docker

tl;dr; To build once and run Docker containers with different files use a volume mount. If that's not an option, like in CircleCI, avoid volume mount and rely on container build every time.

What the heck is a volume mount anyway?

Laugh all you like but after almost a year of using Docker I'm still learning the basics. Apparently. This, now, feels laughable but there's a small chance someone else stumbles like I did and they might appreciate this.

If you have a volume mounted for a service in your docker-compose.yml it will basically take whatever you mount and lay that on top of what was in the Docker container. Doing a volume mount into the same working directory as your container is totally common. When you do that the files on the host (the files/directories mounted) get used between each run. If you don't do that, you're stuck with the files, from your host, from the last time you built.

Consider...:

    # Dockerfile
    FROM python:3.6-slim
    LABEL maintainer="mail@peterbe.com"
    COPY . /app
    WORKDIR /app
    CMD ["python", "run.py"]

and...:

    #!/usr/bin/env python
    if __name__ == '__main__':
        print("hello!")

Let's build it:

    $ docker image build -t test:latest .
    Sending build context to Docker daemon 5.12kB
    Step 1/5 : FROM python:3.6-slim
     ---> 0f1dc0ba8e7b
    Step 2/5 : LABEL maintainer "mail@peterbe.com"
     ---> Using cache
     ---> 70cf25f7396c
    Step 3/5 : COPY . /app
     ---> 2e95935cbd52
    Step 4/5 : WORKDIR /app
     ---> bc5be932c905
    Removing intermediate container a66e27ecaab3
    Step 5/5 : CMD python run.py
     ---> Running in d0cf9c546fee
     ---> ad930ce66a45
    Removing intermediate container d0cf9c546fee
    Successfully built ad930ce66a45
    Successfully tagged test:latest

And run it:

    $ docker container run test:latest
    hello!

So basically my little run.py got copied into the container by the Dockerfile. Let's change the file:

    $ sed -i.bak s/hello/allo/g run.py
    $ python run.py
    allo!

But it won't run like that if we run the container again:

    $ docker container run test:latest
    hello!

So, the container still runs the Python file as it was at the time the image was built. Two options:

1) Rebuild, or

2) Volume mount in the host directory

That's it! Those are your choices.

Rebuild might take time. So, let's mount the current directory from the host:

    $ docker container run -v `pwd`:/app test:latest
    allo!

So yay! Now it runs the container with the latest file from my host directory.

The dark side of volume mounts

So, if it's more convenient to "refresh the files in the container" with a volume mount instead of container rebuild, why not always do it for everything?

For one thing, there might be files built inside the container that cease to be visible if you override that workspace with your own volume mount.
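A toy Python model can make that hiding effect easier to picture. It is a simplification, not how Docker implements mounts: `visible_files` is a hypothetical function and the node_modules path is an invented example of something built inside the image.

```python
def visible_files(image_files, mounts):
    """Model which files a container sees once host directories are mounted.

    image_files maps absolute paths to a label for where they came from.
    mounts maps a mount point to the list of relative paths on the host.
    """
    view = dict(image_files)
    for mount_point, host_files in mounts.items():
        prefix = mount_point.rstrip("/") + "/"
        # Everything the image had under the mount point becomes hidden.
        view = {
            path: source
            for path, source in view.items()
            if not path.startswith(prefix)
        }
        # Only what exists in the host directory shows up there instead.
        for relative_path in host_files:
            view[prefix + relative_path] = "host"
    return view


image = {
    "/app/run.py": "image",
    "/app/node_modules/react/index.js": "image",  # built during image build
    "/usr/bin/python": "image",
}
view = visible_files(image, {"/app": ["run.py"]})
for path in sorted(view):
    print(path, "<-", view[path])
```

In this model run.py now comes from the host, which is the point of the mount, but the node_modules file built into the image is no longer visible at all.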

The other crucial thing I learned the hard way (seems so obvious now!) is that there isn't always a host directory to mount. In particular, in tecken we use a base ubuntu image and in the run parts of the CircleCI configuration we were using docker-compose run ... with directives (in the docker-compose.yml file) that use volume mounts. So, the rather cryptic effect was that the files mounted into the container were not the files checked out from the git branch.

The resolution in this case, was to be explicit when running Docker commands in CircleCI to only do build followed by run without a volume mount. In particular, to us it meant changing from docker-compose run frontend lint to docker-compose run frontend-ci lint. Basically, it's a separate directive in the docker-compose.yml file that is exclusive to CI.

In conclusion

I feel dumb for not seeing this clearly before.

The mistake that triggered me was that when I ran docker-compose run test test (the first test is the docker-compose directive, the second test is the name of the script sent to CMD) it didn't change the outputs when I edited the files in my editor. Adding a volume mount to that directive solved it for me locally on my laptop but didn't work in CircleCI for reasons (I can't remember how it errored).

So now we have this:

    # In docker-compose.yml
    frontend:
      build:
        context: .
        dockerfile: Dockerfile.frontend
      environment:
        - NODE_ENV=development
      ports:
        - "3000:3000"
        - "35729:35729"
      volumes:
        - $PWD/frontend:/app
      command: start

    # Same as 'frontend' but no volumes or command
    frontend-ci:
      build:
        context: .
        dockerfile: Dockerfile.frontend

"No space left on device" on OSX Docker

October 3, 2017

13 comments Web development, MacOSX, Docker

UPDATE 2020

As Greg Brown pointed out, the new way is:

    docker container prune
    docker image prune

Original blog post...

If you run out of disk space in your Docker containers on OSX, this is probably the best thing to run:

    docker rm $(docker ps -q -f 'status=exited')
    docker rmi $(docker images -q -f "dangling=true")
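One wrinkle with the two commands above: if there are no exited containers or dangling images, the $(...) part expands to nothing and docker complains that it needs at least one argument. If you script this, a guard avoids that. Below is a minimal Python sketch that only builds the argument lists; `cleanup_commands` is a hypothetical helper and the IDs are placeholders.

```python
def cleanup_commands(exited_container_ids, dangling_image_ids):
    """Build docker cleanup commands, skipping any with nothing to remove."""
    commands = []
    if exited_container_ids:
        commands.append(["docker", "rm", *exited_container_ids])
    if dangling_image_ids:
        commands.append(["docker", "rmi", *dangling_image_ids])
    return commands


# Placeholder IDs; in practice they would come from the output of
# `docker ps -q -f status=exited` and `docker images -q -f dangling=true`.
for command in cleanup_commands(["a66e27ecaab3"], []):
    print(" ".join(command))
```

Each resulting list can be handed to subprocess.run(command, check=True) to actually execute it.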

The Problem

This isn't the first time it's happened, so I'm blogging about it so I don't forget. My postgres image in my docker-compose.yml didn't start, and since it's a linked service its problem is "hidden". Running it in the foreground instead, you can see what the problem is:

    ▶ docker-compose run db
    The files belonging to this database system will be owned by user "postgres".
    This user must also own the server process.
    The database cluster will be initialized with locale "en_US.utf8".
    The default database encoding has accordingly been set to "UTF8".
    The default text search configuration will be set to "english".
    Data page checksums are disabled.
    fixing permissions on existing directory /var/lib/postgresql/data ... ok
    initdb: could not create directory "/var/lib/postgresql/data/pg_xlog": No space left on device
    initdb: removing contents of data directory "/var/lib/postgresql/data"

Docker on OSX

I admit that I have so much to learn about Docker and the learning is slow. Docker is amazing but I think I'm slow to learn because I'm just not that interested as long as it works and I can work on my apps.

It seems to me that on OSX the storage for all Docker containers lives in one big file, and that file is capped at 64GB:

    ▶ cd ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/
    com.docker.docker/Data/com.docker.driver.amd64-linux ▶ ls -lh Docker.qcow2
    -rw-r--r--@ 1 peterbe staff 63G Oct 3 08:51 Docker.qcow2

If you run the above-mentioned commands (docker rm ...), this file does not shrink, but space is freed up. It's just like how MongoDB allocates (or used to allocate) much more disk space than it actually uses.

If you delete that Docker.qcow2 and restart Docker the space problem goes away but then the problem is that you lose all your active containers which is especially annoying if you have useful data in database containers.