I have a side-project that is basically a React frontend, a Django API server and a Node universal React renderer. The killer feature is its Elasticsearch database that searches almost 2.5M large texts and 200K named objects. All the data is stored in PostgreSQL and there's some Python code that copies it over to Elasticsearch for indexing.

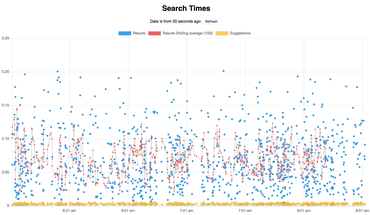

The PostgreSQL database is about 10GB and the Elasticsearch (version 6.1.0) indices are about 6GB. It's moderately big, and even though individual searches take ~75ms on average (in production), it's hefty. At least for a side-project.

On my MacBook Pro laptop, I use Docker to do development. Docker makes it really easy to run one command that starts memcached, Django, an AWS Product API Node app, create-react-app for the search and a separate create-react-app for the stats web app.

At first I tried to run PostgreSQL and Elasticsearch in Docker too, but after many attempts I had to just give up. It was too slow. Elasticsearch would keep crashing even though I increased the memory available to Docker to 4GB.

This very blog (www.peterbe.com) has a similar stack: Redis, PostgreSQL and Elasticsearch, all running in Docker. It works great. One single docker-compose up web starts everything I need. But when it comes to much larger databases, I found my macOS host to be much more performant.

So the dark side of this is that I have to remember to do more things when starting work on this project. My PostgreSQL was installed with Homebrew and is always running on my laptop. For Elasticsearch I have to open a dedicated terminal and go to a specific location to start the Elasticsearch for this project (e.g. make start-elasticsearch).

The way I point the Django app inside Docker at the databases on the host is that I have this in my Django project's settings.py:

    import dj_database_url
    from decouple import Csv, config

    DATABASES = {
        'default': config(
            'DATABASE_URL',
            # Hostname 'docker.for.mac.host.internal' assumes
            # you have at least Docker 17.12.
            # For older versions of Docker use 'docker.for.mac.localhost'
            default='postgresql://peterbe@docker.for.mac.host.internal/songsearch',
            cast=dj_database_url.parse
        )
    }

    # Csv() turns a comma-separated string into a list of hosts.
    ES_HOSTS = config('ES_HOSTS', default='docker.for.mac.host.internal:9200', cast=Csv())
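
To make the `cast=dj_database_url.parse` part a bit more concrete, here is a rough sketch of what happens to that URL, written with only the standard library. The function name `database_settings_from_url` is made up for illustration, and the real `dj_database_url.parse` returns more keys than this; it only shows how the hostname in the URL ends up as the database host Django connects to.

```python
from urllib.parse import urlsplit


def database_settings_from_url(url):
    """Break a postgresql:// URL into the main keys of a Django DATABASES entry.

    Hypothetical helper for illustration only; not dj_database_url's code.
    """
    parts = urlsplit(url)
    return {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": parts.path.lstrip("/"),   # '/songsearch' -> 'songsearch'
        "USER": parts.username or "",
        "PASSWORD": parts.password or "",
        "HOST": parts.hostname or "",     # the host as seen from inside Docker
        "PORT": parts.port or "",         # empty means the default port
    }


settings = database_settings_from_url(
    "postgresql://peterbe@docker.for.mac.host.internal/songsearch"
)
```

Here `settings["HOST"]` is `docker.for.mac.host.internal`, so the Django process inside the container reaches out to the PostgreSQL that runs on the Mac itself.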

(Actually, in reality the defaults in the settings.py code are localhost and I use docker-compose.yml environment variables to override them, but the point is hopefully still there.)
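
The "default is localhost, environment variable overrides it" arrangement can be sketched roughly like this, using only the standard library. The helpers `env_setting` and `split_csv` are hypothetical stand-ins for decouple's `config` and `Csv`, and the two dictionaries simulate the environment on the host and inside a container; none of this is the project's actual code.

```python
import os


def split_csv(value):
    """Split a comma-separated string into a list of stripped, non-empty items."""
    return [item.strip() for item in value.split(",") if item.strip()]


def env_setting(name, default, cast=str, environ=None):
    """Read `name` from the environment, fall back to `default`, then apply `cast`."""
    environ = os.environ if environ is None else environ
    return cast(environ.get(name, default))


# On the host nothing is set, so the localhost default is used.
host_env = {}
# Inside Docker, docker-compose.yml would set the variable (assumed value).
docker_env = {"ES_HOSTS": "docker.for.mac.host.internal:9200"}

ES_HOSTS_ON_HOST = env_setting(
    "ES_HOSTS", "localhost:9200", cast=split_csv, environ=host_env
)
ES_HOSTS_IN_DOCKER = env_setting(
    "ES_HOSTS", "localhost:9200", cast=split_csv, environ=docker_env
)
```

The nice thing about this shape is that the same settings.py works unchanged whether Django runs on the host or in a container; only the environment differs.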

And that's basically it. Now I get Docker to do what various virtualenvs and terminal scripts used to do, but with the performance of running the big databases on the host.

Comments

Great article!

Do you still make the same observation and reach the same conclusion today?

For your blog, the docker-compose file doesn't exist. Why? Did you have the same problem between 2018 and 2020?

So, your Django project is running in a Docker container, while PostgreSQL and Elasticsearch are running on the host machine.

I have a stack (PHP, Apache, PostgreSQL) with the same database performance problem.

In my case, the database is small but contains SQL views that use a lot of CPU, memory, ...

I tried setting shm_size in docker-compose.yml, but the problem remains.

It's frustrating not to be able to have a 100% containerized stack, but I must be pragmatic.

Thanks in advance.

I don't use Docker unless I absolutely have to. It's horrible in many ways for development, but sometimes it has many advantages too. (By the way, I think foreman/honcho is almost always better than docker-compose.)

The golden solution is to be flexible. And I think that's what this blog post is about. You give people the ability to do development simply by running `git clone` and `docker-compose up`, but as soon as they need to do things that suck in Docker, it should be possible to do those outside Docker.

So I think your README should say: "Use this docker-compose to get started. But if you want to do benchmarking (or something that pushes the limits of Docker), follow these instructions to leverage your host..."

The other incredibly important tip/recommendation is to make your software NOT dependent on specific versions. If you don't do weird things with your SQL database or whatever it is, it becomes much easier to leverage your host. For example, write your software so it works with Postgres 10, 11, 13, etc.

Hello Peter,

Thanks for your answers.

I didn't know about foreman/honcho. Interesting.

Yes, being able to be flexible!

I agree with your recommendation.

However:

- A 100% containerized stack is possible for lightweight web applications and websites (CMS, blog, ...). Do you agree?

- I agree: the application must work with PostgreSQL 10, 11, 13. The advantage of Docker is being able to use the latest version of PostgreSQL and also to run multiple versions (but you know every advantage of Docker, I know ;))