How to encrypt a file with Emacs on macOS (ccrypt)

January 29, 2019

0 comments MacOSX, Linux

Suppose you have a cleartext file that you want to encrypt with a password. Here's how you do that with ccrypt on macOS. First:

▶ brew install ccrypt

Now, you have the ccrypt program. Let's test it:

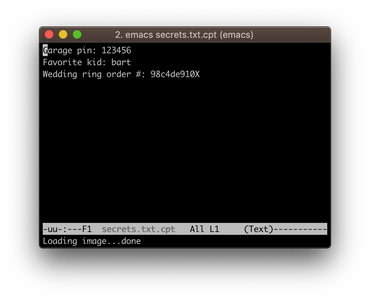

▶ cat secrets.txt

Garage pin: 123456

Favorite kid: bart

Wedding ring order no: 98c4de910X

▶ ccrypt secrets.txt

Enter encryption key: ▉▉▉▉▉▉▉▉▉▉▉

Enter encryption key: (repeat) ▉▉▉▉▉▉▉▉▉▉▉

# Note that the original 'secrets.txt' is replaced

# with the '.cpt' version.

▶ ls | grep secrets

secrets.txt.cpt

▶ less secrets.txt.cpt

"secrets.txt.cpt" may be a binary file. See it anyway?

There. Now you can back up that file on Dropbox or whatever and not have to worry about anybody being able to open it without your password. To read it again:

▶ ccrypt --decrypt --cat secrets.txt.cpt

Enter decryption key: ▉▉▉▉▉▉▉▉▉▉▉

Garage pin: 123456

Favorite kid: bart

Wedding ring order no: 98c4de910X

▶ ls | grep secrets

secrets.txt.cpt

Or, to edit it you can do these steps:

▶ ccrypt --decrypt secrets.txt.cpt

Enter decryption key: ▉▉▉▉▉▉▉▉▉▉▉

▶ vi secrets.txt

▶ ccrypt secrets.txt

Enter encryption key:

Enter encryption key: (repeat)

It's clunky that you have to extract the file and remember to encrypt it back again. That's where you can use emacs. Assuming you have emacs already installed and you have a ~/.emacs file, add these lines to your ~/.emacs:

(setq auto-mode-alist

(append '(("\\.cpt$" . sensitive-mode))

auto-mode-alist))

(add-hook 'sensitive-mode-hook (lambda () (auto-save-mode -1)))

(setq load-path (cons "/usr/local/share/emacs/site-lisp/ccrypt" load-path))

(require 'ps-ccrypt "ps-ccrypt.el")

By the way, how did I know that the load path should be /usr/local/share/emacs/site-lisp/ccrypt? I looked at the output from brew:

▶ brew info ccrypt

ccrypt: stable 1.11 (bottled)

Encrypt and decrypt files and streams

...

==> Caveats

Emacs Lisp files have been installed to:

/usr/local/share/emacs/site-lisp/ccrypt

...

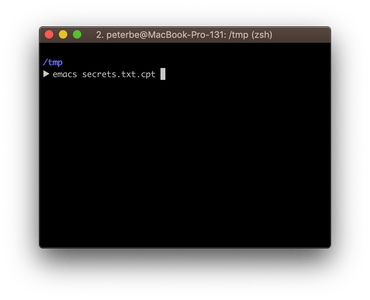

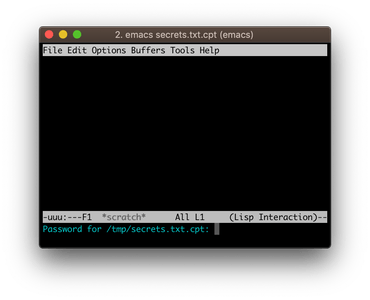

Anyway, now I can use emacs to open the secrets.txt.cpt file and it will automatically handle the password stuff:

This is really convenient. Now you can open an encrypted file, type in your password, and it will take care of encrypting it for you when you're done (saving the file).

Be warned! I'm not an expert at either emacs or encryption, so just be careful. If you get nervous, take precautions and set aside more time to study this deeper.
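If you don't use emacs, the manual decrypt, edit, encrypt steps from earlier could be wrapped in a small shell function. This is only a sketch: `edit_cpt` is a made-up name, not something ccrypt ships, and the cleartext file still sits on disk while the editor is open.

```shell
# Hypothetical helper (not part of ccrypt): decrypt, edit, re-encrypt.
edit_cpt() {
  # Only accept files that end in .cpt
  case "$1" in
    *.cpt) ;;
    *) echo "usage: edit_cpt FILE.cpt" >&2; return 2 ;;
  esac
  plain="${1%.cpt}"                  # secrets.txt.cpt -> secrets.txt
  ccrypt --decrypt "$1" || return 1  # asks for the key, leaves the cleartext file
  "${EDITOR:-vi}" "$plain"           # edit the cleartext file
  ccrypt --encrypt "$plain"          # asks for the key twice, recreates the .cpt file
}
```

You would run it as `edit_cpt secrets.txt.cpt`. If the decryption key is wrong, it stops before opening the editor.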

elapsed function in bash to print how long things take

December 12, 2018

0 comments MacOSX, Linux

I needed this for a project and it has served me pretty well. Let's jump right into it:

# This is elapsed.sh

SECONDS=0

function elapsed()

{

local T=$SECONDS

local D=$((T/60/60/24))

local H=$((T/60/60%24))

local M=$((T/60%60))

local S=$((T%60))

(( $D > 0 )) && printf '%d days ' $D

(( $H > 0 )) && printf '%d hours ' $H

(( $M > 0 )) && printf '%d minutes ' $M

(( $D > 0 || $H > 0 || $M > 0 )) && printf 'and '

printf '%d seconds\n' $S

}

And here's how you use it:

# Assume elapsed.sh to be in the current working directory

source elapsed.sh

echo "Doing some stuff..."

# Imagine it does something slow that

# takes about 3 seconds to complete.

sleep 3

elapsed

echo "Some quick stuff..."

sleep 1

elapsed

echo "Doing some slow stuff..."

sleep 61

elapsed

The output of running that is:

Doing some stuff...

3 seconds

Some quick stuff...

4 seconds

Doing some slow stuff...

1 minutes and 5 seconds

Basically, if you have a bash script that does a bunch of slow things, having a line of elapsed after some blocks of code will print out how long the script has been running.

It's not beautiful but it works.
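One possible variation, as a sketch: pass the number of seconds in as an argument instead of reading $SECONDS, so you can check the formatting without waiting around. The name `format_seconds` is made up for this example. The arithmetic is the same as in elapsed.sh.

```shell
# Hypothetical helper (not in the original elapsed.sh): same arithmetic,
# but the number of seconds is an argument instead of $SECONDS.
format_seconds() {
  local T=$1
  local D=$((T/60/60/24))
  local H=$((T/60/60%24))
  local M=$((T/60%60))
  local S=$((T%60))
  # Only print the units that are non-zero
  [ "$D" -gt 0 ] && printf '%d days ' "$D"
  [ "$H" -gt 0 ] && printf '%d hours ' "$H"
  [ "$M" -gt 0 ] && printf '%d minutes ' "$M"
  # Same condition as the original: any larger unit present
  if [ "$D" -gt 0 ] || [ "$H" -gt 0 ] || [ "$M" -gt 0 ]; then
    printf 'and '
  fi
  printf '%d seconds\n' "$S"
}

format_seconds 65      # prints "1 minutes and 5 seconds"
format_seconds 90061   # prints "1 days 1 hours 1 minutes and 1 seconds"
```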

How I performance test PostgreSQL locally on macOS

December 10, 2018

2 comments Web development, MacOSX, PostgreSQL

It's weird to do performance analysis of a database you run on your laptop. When testing some app, your local instance probably has 1/1000 the amount of realistic data compared to a production server. Or, you're running a bunch of end-to-end integration tests whose PostgreSQL performance doesn't make sense to measure.

Anyway, if you are doing some performance testing of an app that uses PostgreSQL, one great tool to use is pghero. I use it for my side-projects and it gives me such nice insights into slow queries that I'm willing to live with the cost of running it on a production database.

This is more of a brain dump of how I run it locally:

First, you need to edit your postgresql.conf. Even if you used Homebrew to install it, it's not clear where the right config file is. Start psql (on any database) and type this to find out which file is the one:

$ psql kintobench

kintobench=# show config_file;

config_file

-----------------------------------------

/usr/local/var/postgres/postgresql.conf

(1 row)

Now, open /usr/local/var/postgres/postgresql.conf and add the following lines:

# Peterbe: From Pghero's configuration help.

shared_preload_libraries = 'pg_stat_statements'

pg_stat_statements.track = all

Now, to restart the server use:

▶ brew services restart postgresql

Stopping `postgresql`... (might take a while)

==> Successfully stopped `postgresql` (label: homebrew.mxcl.postgresql)

==> Successfully started `postgresql` (label: homebrew.mxcl.postgresql)

The next thing you need is pghero itself, and it's easy to run in Docker. So to start, you need Docker for Mac installed. You also need to know the database URL. Here's how I ran it:

docker run -ti -e DATABASE_URL=postgres://peterbe:@host.docker.internal:5432/kintobench -p 8080:8080 ankane/pghero

Note the trick of peterbe:@host.docker.internal. I don't use a password, but the Docker container doesn't know my terminal username, so it has to be spelled out in the URL. And host.docker.internal is there so the Docker container can reach the PostgreSQL installed on the host.
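If you run this against more than one local database, the URL could be put together by a tiny helper. This is a sketch, and `pghero_database_url` is a made-up name. It only assumes what the command above assumes: no password, port 5432, and host.docker.internal as the host.

```shell
# Hypothetical helper: build the DATABASE_URL for a password-less local
# PostgreSQL that is reached from inside a Docker container on a Mac.
pghero_database_url() {
  # $1 = PostgreSQL user, $2 = database name
  printf 'postgres://%s:@host.docker.internal:5432/%s\n' "$1" "$2"
}

pghero_database_url peterbe kintobench

# To start pghero with it (same command as before, only the URL is computed):
# docker run -ti -e DATABASE_URL="$(pghero_database_url "$(whoami)" kintobench)" -p 8080:8080 ankane/pghero
```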

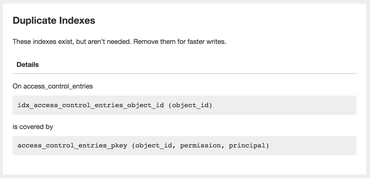

Once that starts up you can go to http://localhost:8080 in a browser and see a listing of all the cumulatively slowest queries. There are other cool features in pghero too that you can immediately benefit from, such as hints about unused/redundant database indices.

Hope it helps!

The best grep tool in the world; ripgrep

June 19, 2018

3 comments Linux, Web development, MacOSX

tl;dr; ripgrep (aka. rg) is the best tool to grep today.

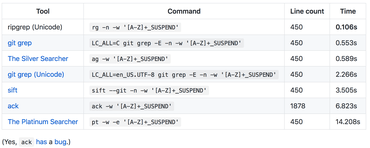

ripgrep is a tool for searching files. Its killer feature is that it's fast. Like, really really fast. Faster than sift, git grep, ack, regular grep etc.

If you don't believe me, either read this detailed blog post from its author or just jump straight to the conclusion:

- For both searching single files and huge directories of files, no other tool obviously stands above ripgrep in either performance or correctness.

- ripgrep is the only tool with proper Unicode support that doesn’t make you pay dearly for it.

- Tools that search many files at once are generally slower if they use memory maps, not faster.

I used to use git grep whenever I was inside a git repo and sift for everything else. That alone was a huge step up from regular grep. Granted, almost all my git repos are small enough that regular git grep is often faster than I can perceive. But with ripgrep I can just add --no-ignore-vcs and it searches in all the files mentioned in .gitignore too. That's useful when you want to search in your own source as well as the files in node_modules.

The installation instructions are easy. I installed it with brew install ripgrep and the best way to learn how to use it is rg --help. Remember that it has a lot of cool features that are well worth learning. It's written in Rust and so far I haven't had a single crash, ever. The ability to search by file type takes some getting used to (tip! use: rg --type-list) and remember that you can pipe rg output to another rg. For example, to search for all lines that contain query and string you can use rg query | rg string.
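The pipe trick could be wrapped in a small function if you use it a lot. This is just a sketch: `lines_with_both` is a made-up name, and the grep fallback is my own addition so the function still works on a machine without rg.

```shell
# Hypothetical helper: print the lines under a directory that contain
# both words. Uses rg when it is installed, otherwise falls back to grep.
lines_with_both() {
  # $1 = first word, $2 = second word, $3 = directory (defaults to .)
  if command -v rg >/dev/null 2>&1; then
    # --no-filename keeps only the matching line, without the file path
    rg --no-filename "$1" "${3:-.}" | rg "$2"
  else
    grep -rh "$1" "${3:-.}" | grep "$2"
  fi
}
```

For example, `lines_with_both query string src/` prints every line under src/ that mentions both query and string.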

How to unset aliases set by Oh My Zsh

June 14, 2018

4 comments Linux, MacOSX

I use Oh My Zsh and I highly recommend it. However, it sets some aliases that I don't want. In particular, there's a plugin called git.plugin.zsh (located in ~/.oh-my-zsh/plugins/git/git.plugin.zsh) that interferes with a global binary I have in $PATH. So when I start a shell the executable gg becomes...:

▶ which gg

gg: aliased to git gui citool

That overrides /usr/local/bin/gg which is the one I want to execute when I type gg. To unset that I can run...:

▶ unalias gg

▶ which gg

/usr/local/bin/gg

To override it "permanently", I added, to the end of ~/.zshrc:

# This unsets ~/.oh-my-zsh/plugins/git/git.plugin.zsh

# So my /usr/local/bin/gg works instead

unalias gg

Now whenever I start a new terminal, it defaults to the gg in /usr/local/bin/gg instead.
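One thing to be aware of: unalias prints an error if the alias doesn't exist, for example if a future version of the plugin stops defining gg. A guarded version could look like this. It's a sketch, and `drop_alias` is a made-up name.

```shell
# Hypothetical helper: remove an alias only if it is currently defined,
# so nothing is printed when the alias is already gone.
drop_alias() {
  # $1 = alias name
  if alias "$1" >/dev/null 2>&1; then
    unalias "$1"
  fi
}

drop_alias gg
```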

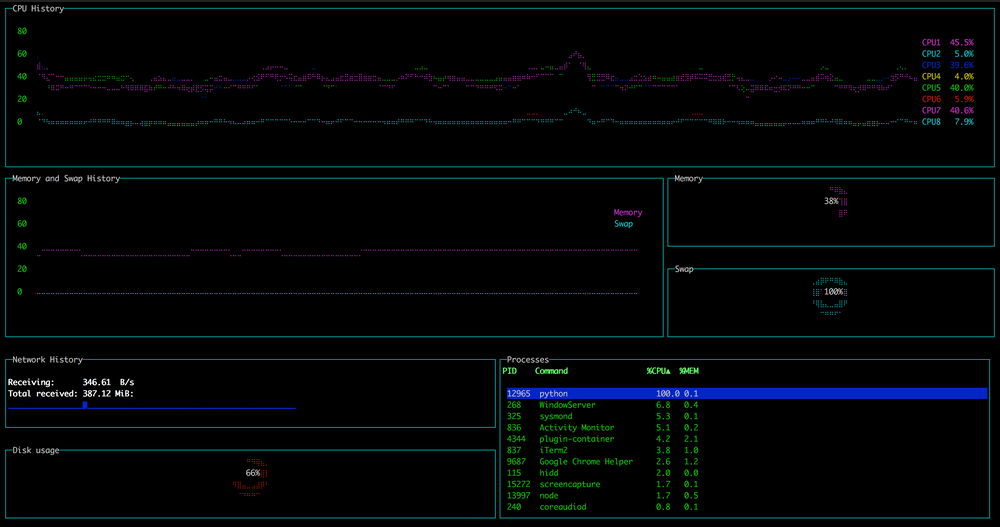

gtop is best

May 2, 2018

0 comments Linux, MacOSX, JavaScript

To me, using top inside a Linux server via SSH is all muscle-memory and it's definitely good enough. On my MacBook, when working on some long-running code that is resource intensive, the best tool I know of is: gtop

I like it because it has the graphs I want and need. It splits up the work of each CPU, which is awesome. That's useful for understanding how well a program is able to leverage more than one CPU core.

And it's really nice to have the list of Processes there to be able to quickly compare which programs are running and how that might affect the use of the CPUs.

Instead of listing alternatives I've tried before, hopefully this Reddit discussion has good links to other alternatives.