Find the largest node_modules directories with bash

September 30, 2022

Bash, MacOSX, Linux

tl;dr; fd -I -t d node_modules | rg -v 'node_modules/(\w|@)' | xargs du -sh | sort -hr

It's very possible that there's a tool that does this, but if so please enlighten me.

The objective is to find which of all your various projects' node_modules directories are eating up the most disk space.

The challenge is that there are often nested node_modules directories within them, and those shouldn't be counted separately.

The command uses fd, which you can install with brew install fd; it's a fast alternative to the built-in find. Definitely worth investing in if you like to live fast on the command line.

The other important command here is rg, which comes from brew install ripgrep and is a fast alternative to the built-in grep. Sure, I think one can use find and grep, but that can be left as an exercise to the reader.

▶ fd -I -t d node_modules | rg -v 'node_modules/(\w|@)' | xargs du -sh | sort -hr

1.1G ./GROCER/groce/node_modules/

1.0G ./SHOULDWATCH/youshouldwatch/node_modules/

826M ./PETERBECOM/django-peterbecom/adminui/node_modules/

679M ./JAVASCRIPT/wmr/node_modules/

546M ./WORKON/workon-fire/node_modules/

539M ./PETERBECOM/chiveproxy/node_modules/

506M ./JAVASCRIPT/minimalcss-website/node_modules/

491M ./WORKON/workon/node_modules/

457M ./JAVASCRIPT/battleshits/node_modules/

445M ./GITHUB/DOCS/docs-internal/node_modules/

431M ./GITHUB/DOCS/docs/node_modules/

418M ./PETERBECOM/preact-cli-peterbecom/node_modules/

418M ./PETERBECOM/django-peterbecom/adminui0/node_modules/

399M ./GITHUB/THEHUB/thehub/node_modules/

...

How it works:

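fd -I -t d node_modules: Find all directories (-t d) called node_modules, and ignore (-I) any .gitignore directives in their parent directories. That matters because node_modules is almost always listed in .gitignore, which fd otherwise respects.

rg -v 'node_modules/(\w|@)': Exclude every match where node_modules/ is followed by a @ or a word character ([A-Za-z0-9_]). That drops nested ones like ./project/node_modules/some-package/node_modules/ and leaves only each project's top-level node_modules.

xargs du -sh: Run du -sh on the found directories. That's like doing cd some/directory && du -sh for each one, where du means "disk usage", -s means summarize (one total per directory) and -h means human-readable.

sort -hr: Sort by the first column as a "human numeric sort", meaning it understands that "1M" is more than "20K", and -r reverses the order so the largest comes first.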
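If you don't have fd and rg installed, or just want to see the logic spelled out, here's a rough Python equivalent (Python 3.9+). It's a sketch, not an existing tool, and the function names are made up. It skips nested node_modules by not descending into a node_modules directory once it's found one, which has the same effect as the rg -v filter. Two differences to be aware of: it sums apparent file sizes, so the numbers won't exactly match du (which counts disk blocks), and unlike fd it also walks hidden directories.

```python
import os
from pathlib import Path


def top_level_node_modules(start: Path) -> list[Path]:
    """Find node_modules directories that aren't nested in another node_modules."""
    found = []
    for root, dirs, _files in os.walk(start):
        if "node_modules" in dirs:
            found.append(Path(root) / "node_modules")
            # Don't descend into it, so nested node_modules are never seen
            # (the same effect as the rg -v filter in the pipeline).
            dirs.remove("node_modules")
    return found


def dir_size(path: Path) -> int:
    """Sum the apparent sizes, in bytes, of all regular files under path."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):  # count real files, not symlinks
                total += os.path.getsize(full)
    return total


def human(n: float) -> str:
    """Format a byte count in a du -h-like style (B, K, M, G, T)."""
    for unit in ("B", "K", "M", "G"):
        if n < 1024:
            return f"{n:.0f}{unit}" if unit == "B" else f"{n:.1f}{unit}"
        n /= 1024
    return f"{n:.1f}T"


def largest_node_modules(start: Path) -> list[tuple[int, Path]]:
    """Return (size, path) pairs with the biggest first, like sort -hr."""
    sizes = [(dir_size(p), p) for p in top_level_node_modules(start)]
    return sorted(sizes, reverse=True)


if __name__ == "__main__":
    for size, path in largest_node_modules(Path(".")):
        print(human(size), path)
```

Run it from the directory that holds your projects.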

Now, if I want to free up some disk space, I can look through the list and if I recognize a project I almost never work on any more, I just send it to rm -fr.

Comparing compression commands with hyperfine

July 6, 2022

Bash, MacOSX, Linux

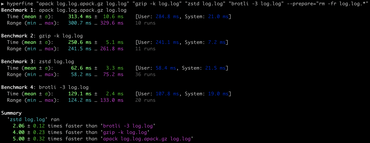

Today I stumbled across a neat CLI for benchmarking and comparing the speed of other CLIs: hyperfine, by David Peter (@sharkdp).

It's a great tool in your arsenal for quick benchmarks in the terminal.

It's written in Rust and is easily installed with brew install hyperfine. For example, let's compare a couple of different commands for compressing a file into a new compressed file. I know it's comparing apples and oranges but it's just an example:

It basically executes the following commands over and over and then compares how long each one took on average:

apack log.log.apack.gz log.log

gzip -k log.log

zstd log.log

brotli -3 log.log
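hyperfine does a lot more than this, including warmup runs, subtracting shell start-up overhead and warning about statistical outliers. Still, the core idea of "run each command over and over and compare the averages" fits in a few lines of Python. This is an illustration of the idea, not how hyperfine is implemented, and time_command and summarize are made-up names.

```python
import shlex
import statistics
import subprocess
import time
from typing import Optional


def time_command(cmd: str, runs: int = 10, prepare: Optional[str] = None) -> list[float]:
    """Run cmd `runs` times and return each wall-clock duration in milliseconds.

    If prepare is given, it's run through the shell (untimed) before every
    timed run, similar in spirit to hyperfine's --prepare option.
    """
    timings = []
    for _ in range(runs):
        if prepare:
            subprocess.run(prepare, shell=True, check=True)
        start = time.perf_counter()
        subprocess.run(shlex.split(cmd), check=True, stdout=subprocess.DEVNULL)
        timings.append((time.perf_counter() - start) * 1000)
    return timings


def summarize(timings: list[float]) -> dict[str, float]:
    """Mean, standard deviation, min and max of a list of timings."""
    return {
        "mean": statistics.mean(timings),
        "stdev": statistics.stdev(timings) if len(timings) > 1 else 0.0,
        "min": min(timings),
        "max": max(timings),
    }
```

With the files from this post, summarize(time_command("zstd log.log", prepare="rm -fr log.log.*")) would time zstd ten times. The prepare step deletes the previous output before each run, because zstd and gzip -k don't silently overwrite an existing output file.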

If you're curious about the ~results~ apples vs oranges, the final result is:

▶ ls -lSh log.log*

-rw-r--r-- 1 peterbe staff 25M Jul 3 10:39 log.log

-rw-r--r-- 1 peterbe staff 2.4M Jul 5 22:00 log.log.apack.gz

-rw-r--r-- 1 peterbe staff 2.4M Jul 3 10:39 log.log.gz

-rw-r--r-- 1 peterbe staff 2.2M Jul 3 10:39 log.log.zst

-rw-r--r-- 1 peterbe staff 2.1M Jul 3 10:39 log.log.br

The point is that you type hyperfine followed by each command in quotation marks. The --prepare command is run before each timing run, and you can also use --cleanup="{cleanup command here}".

It's versatile, so the commands don't have to be different programs. For example, hyperfine "python optimization1.py" "python optimization2.py" compares two Python scripts.

🎵 You can also export the output to a Markdown file. Here, I used:

▶ hyperfine "apack log.log.apack.gz log.log" "gzip -k log.log" "zstd log.log" "brotli -3 log.log" --prepare="rm -fr log.log.*" --export-markdown log.compress.md

▶ cat log.compress.md | pbcopy

and it becomes this:

| Command | Mean [ms] | Min [ms] | Max [ms] | Relative |
|---|---|---|---|---|
| apack log.log.apack.gz log.log | 291.9 ± 7.2 | 283.8 | 304.1 | 4.90 ± 0.19 |
| gzip -k log.log | 240.4 ± 7.3 | 232.2 | 256.5 | 4.03 ± 0.18 |
| zstd log.log | 59.6 ± 1.8 | 55.8 | 65.5 | 1.00 |
| brotli -3 log.log | 122.8 ± 4.1 | 117.3 | 132.4 | 2.06 ± 0.09 |
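The Relative column is each command's mean divided by the fastest mean, so the winner gets 1.00. Recomputing it from the table's own means gives 1.00, 2.06, 4.03 and 4.90, the same as hyperfine's numbers. As far as I understand, the ± part is an uncertainty hyperfine propagates from the standard deviations, and this quick check leaves it out.

```python
# Mean times in milliseconds, copied from the hyperfine table.
means_ms = {
    "apack log.log.apack.gz log.log": 291.9,
    "gzip -k log.log": 240.4,
    "zstd log.log": 59.6,
    "brotli -3 log.log": 122.8,
}

# "Relative" = each mean divided by the fastest mean.
fastest = min(means_ms.values())
relative = {cmd: round(ms / fastest, 2) for cmd, ms in means_ms.items()}

for cmd, ratio in sorted(relative.items(), key=lambda item: item[1]):
    print(f"{ratio:.2f}  {cmd}")
```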

Umlauts (non-ascii characters) with git on macOS

March 22, 2021

Python, MacOSX

I edit a file called files/en-us/glossary/bézier_curve/index.html and then type git status and I get this:

▶ git status

...

Changes not staged for commit:

...

modified: "files/en-us/glossary/b\303\251zier_curve/index.html"

...

What's that?! First of all, I actually had this wrapped in a Python script that uses GitPython to iterate with for change in repo.index.diff(None):, so what I got was:

FileNotFoundError: [Errno 2] No such file or directory: '"files/en-us/glossary/b\\303\\251zier_curve/index.html"'

What's that?!

At first, I thought something was wrong with how I used GitPython, and that I could force some sort of conversion to UTF-8 with Python. That, and strip the quotation marks with something like path = path[1:-1] if path.startswith('"') else path.

After much googling and experimentation, what totally solved all my problems was to run:

▶ git config --global core.quotePath false

Now you get...:

▶ git status

...

Changes not staged for commit:

...

modified: files/en-us/glossary/bézier_curve/index.html

...

And that also means it works perfectly fine with any GitPython code that does something with the repo.index.diff(None) or repo.index.diff(repo.head.commit).
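For the record, stripping the quotation marks alone wouldn't have been enough. Git also writes each byte of the UTF-8 encoded é as a backslash plus three octal digits (\303\251). If changing the git config isn't an option, undoing that quoting in Python could look like the sketch below. unquote_git_path is a hypothetical helper, not part of GitPython, and it only handles the C-style escapes git uses for paths.

```python
# Single-character escapes git uses in C-style quoted paths (besides \NNN).
_SIMPLE_ESCAPES = {
    "a": b"\a", "b": b"\b", "t": b"\t", "n": b"\n",
    "v": b"\v", "f": b"\f", "r": b"\r", '"': b'"', "\\": b"\\",
}


def unquote_git_path(path: str) -> str:
    """Turn a path quoted by git (core.quotePath=true) back into plain text."""
    if len(path) < 2 or not (path.startswith('"') and path.endswith('"')):
        return path  # git only quotes paths that need it
    body = path[1:-1]
    raw = bytearray()
    i = 0
    while i < len(body):
        if body[i] == "\\" and i + 1 < len(body):
            nxt = body[i + 1]
            if nxt in "0123":
                # Three octal digits encode one byte of the UTF-8 file name.
                raw.append(int(body[i + 1 : i + 4], 8))
                i += 4
                continue
            if nxt in _SIMPLE_ESCAPES:
                raw += _SIMPLE_ESCAPES[nxt]
                i += 2
                continue
        raw += body[i].encode("utf-8")
        i += 1
    return raw.decode("utf-8")
```

Fed the path from the FileNotFoundError above, it returns files/en-us/glossary/bézier_curve/index.html.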

Also, I use the get-diff-action GitHub Action, which would fail to spot files that contained umlauts, but now I run this:

steps:

- uses: actions/checkout@v2

+

+ - name: Config git core.quotePath

+ run: git config --global core.quotePath false

+

- uses: technote-space/get-diff-action@v4.0.6

id: git_diff_content

with:

Build pyenv Python versions on macOS Catalina 10.15

February 19, 2020

Python, MacOSX

UPDATE Mar 7, 2022: For OSX 12.2 Monterey

Here's what I needed to do in 2022 to get this to work:

SDKROOT=/Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX12.1.sdk MACOSX_DEPLOYMENT_TARGET=12.2 PYTHON_CONFIGURE_OPTS="--enable-framework" pyenv install 3.10.2

BELOW IS ORIGINAL BLOG POST

I'm still working on getting pyenv into my bloodstream. It seems like totally the right tool for having different versions of Python available on macOS that don't suddenly break when you run brew upgrade periodically. But everything I tried failed with an error similar to this:

python-build: use openssl from homebrew

python-build: use readline from homebrew

Installing Python-3.7.0...

python-build: use readline from homebrew

BUILD FAILED (OS X 10.15.x using python-build 20XXXXXX)

Inspect or clean up the working tree at /var/folders/mw/0ddksqyn4x18lbwftnc5dg0w0000gn/T/python-build.20190528163135.60751

Results logged to /var/folders/mw/0ddksqyn4x18lbwftnc5dg0w0000gn/T/python-build.20190528163135.60751.log

Last 10 log lines:

./Modules/posixmodule.c:5924:9: warning: this function declaration is not a prototype [-Wstrict-prototypes]

if (openpty(&master_fd, &slave_fd, NULL, NULL, NULL) != 0)

^

./Modules/posixmodule.c:6018:11: error: implicit declaration of function 'forkpty' is invalid in C99 [-Werror,-Wimplicit-function-declaration]

pid = forkpty(&master_fd, NULL, NULL, NULL);

^

./Modules/posixmodule.c:6018:11: warning: this function declaration is not a prototype [-Wstrict-prototypes]

2 warnings and 2 errors generated.

make: *** [Modules/posixmodule.o] Error 1

make: *** Waiting for unfinished jobs....

I read through the Troubleshooting FAQ and the "Common build problems" documentation. Xcode was up to date and I had all the related brew packages upgraded. Nothing seemed to work.

Until I saw this comment on an open pyenv issue: "Unable to install any Python version on MacOS"

All I had to do was replace 10.14 with 10.15, and now it finally worked here on Catalina 10.15. So, the magical line was this:

SDKROOT=/Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX10.15.sdk MACOSX_DEPLOYMENT_TARGET=10.15 PYTHON_CONFIGURE_OPTS="--enable-framework" pyenv install -v 3.7.6
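Getting the SDK version wrong (like the 10.14 vs 10.15 mix-up) is the easy mistake here. The sketch below wraps the same three environment variables in a small Python helper that checks the SDK directory exists before calling pyenv. It assumes Xcode lives in /Applications and that pyenv is on your PATH; pyenv_build_env and pyenv_install are made-up names, not part of pyenv.

```python
import os
import subprocess

# Where Xcode keeps the macOS SDKs, as in the command above.
SDKS_DIR = (
    "/Applications/Xcode.app/Contents/Developer/Platforms/"
    "MacOSX.platform/Developer/SDKs"
)


def pyenv_build_env(sdk_version: str, deployment_target: str) -> dict[str, str]:
    """Copy the current environment and add the three variables from the post."""
    env = dict(os.environ)
    env["SDKROOT"] = f"{SDKS_DIR}/MacOSX{sdk_version}.sdk"
    env["MACOSX_DEPLOYMENT_TARGET"] = deployment_target
    env["PYTHON_CONFIGURE_OPTS"] = "--enable-framework"
    return env


def pyenv_install(python_version: str, sdk_version: str, deployment_target: str) -> None:
    """Run pyenv install -v with those variables, failing early if the SDK is missing."""
    env = pyenv_build_env(sdk_version, deployment_target)
    if not os.path.isdir(env["SDKROOT"]):
        # The usual mistake: an SDK version that isn't installed on this machine.
        raise FileNotFoundError(f"No SDK at {env['SDKROOT']}; see what's in {SDKS_DIR}")
    subprocess.run(["pyenv", "install", "-v", python_version], env=env, check=True)
```

For Catalina that would be pyenv_install("3.7.6", "10.15", "10.15"), and for the Monterey update at the top, pyenv_install("3.10.2", "12.1", "12.2").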

Hopefully, by blogging about it, you'll find this from Googling, and I'll remember it the next time I need it, because it did eat 2 hours of precious evening coding time.

"ld: library not found for -lssl" trying to install mysqlclient in Python on macOS

February 5, 2020

Python, MacOSX

I don't know how many times I've encountered this but by blogging about it, hopefully, next time it'll help me, and you!, find this sooner.

If you get this:

clang -bundle -undefined dynamic_lookup -L/usr/local/opt/readline/lib -L/usr/local/opt/readline/lib -L/Users/peterbe/.pyenv/versions/3.8.0/lib -L/opt/boxen/homebrew/lib -L/usr/local/opt/readline/lib -L/usr/local/opt/readline/lib -L/Users/peterbe/.pyenv/versions/3.8.0/lib -L/opt/boxen/homebrew/lib -L/opt/boxen/homebrew/lib -I/opt/boxen/homebrew/include build/temp.macosx-10.14-x86_64-3.8/MySQLdb/_mysql.o -L/usr/local/Cellar/mysql/8.0.18_1/lib -lmysqlclient -lssl -lcrypto -o build/lib.macosx-10.14-x86_64-3.8/MySQLdb/_mysql.cpython-38-darwin.so

ld: library not found for -lssl

clang: error: linker command failed with exit code 1 (use -v to see invocation)

error: command 'clang' failed with exit status 1

(The most important line is the ld: library not found for -lssl)

On most macOS systems, when trying to install a Python package that requires a binary compile step based on the system openssl (which I think comes from the OS), you'll get this.

The solution is simple, run this first:

export LDFLAGS="-L/usr/local/opt/openssl/lib"

export CPPFLAGS="-I/usr/local/opt/openssl/include"

Depending on your install of things, you might need to adjust this accordingly. For me, I have:

▶ ls -l /usr/local/opt/openssl/

total 1272

-rw-r--r-- 1 peterbe staff 717 Sep 10 09:13 AUTHORS

-rw-r--r-- 1 peterbe staff 582924 Dec 19 11:32 CHANGES

-rw-r--r-- 1 peterbe staff 743 Dec 19 11:32 INSTALL_RECEIPT.json

-rw-r--r-- 1 peterbe staff 6121 Sep 10 09:13 LICENSE

-rw-r--r-- 1 peterbe staff 42183 Sep 10 09:13 NEWS

-rw-r--r-- 1 peterbe staff 3158 Sep 10 09:13 README

drwxr-xr-x 4 peterbe staff 128 Dec 19 11:32 bin

drwxr-xr-x 3 peterbe staff 96 Sep 10 09:13 include

drwxr-xr-x 10 peterbe staff 320 Sep 10 09:13 lib

drwxr-xr-x 4 peterbe staff 128 Sep 10 09:13 share

Now, with those things set you should hopefully be able to do things like:

pip install mysqlclient
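If you'd rather not hardcode /usr/local/opt/openssl (on Apple Silicon Macs, Homebrew lives under /opt/homebrew instead), here's a sketch that asks brew --prefix openssl for the location and runs pip with the same two variables set. It assumes Homebrew's openssl is the one you want to link against. openssl_prefix, openssl_build_env and pip_install_with_openssl are made-up names, and like the exports above it replaces any LDFLAGS/CPPFLAGS you already had.

```python
import os
import shutil
import subprocess
import sys

# The prefix used in the exports above; Homebrew may put openssl elsewhere.
DEFAULT_OPENSSL_PREFIX = "/usr/local/opt/openssl"


def openssl_prefix() -> str:
    """Ask Homebrew for the openssl prefix, falling back to the default path."""
    if shutil.which("brew"):
        result = subprocess.run(
            ["brew", "--prefix", "openssl"], capture_output=True, text=True
        )
        if result.returncode == 0 and result.stdout.strip():
            return result.stdout.strip()
    return DEFAULT_OPENSSL_PREFIX


def openssl_build_env(prefix: str) -> dict[str, str]:
    """Copy the environment and set LDFLAGS/CPPFLAGS like the two exports."""
    env = dict(os.environ)
    env["LDFLAGS"] = f"-L{prefix}/lib"
    env["CPPFLAGS"] = f"-I{prefix}/include"
    return env


def pip_install_with_openssl(package: str) -> None:
    """pip install a package that needs to compile and link against openssl."""
    env = openssl_build_env(openssl_prefix())
    subprocess.run([sys.executable, "-m", "pip", "install", package], env=env, check=True)
```

Then pip_install_with_openssl("mysqlclient") does the same as the exports followed by pip install mysqlclient.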

Experimenting with Nginx worker_processes

February 14, 2019

Web development, Nginx, MacOSX, Linux

I have Nginx 1.15.8 installed with Homebrew on my macOS. By default, /usr/local/etc/nginx/nginx.conf is set to:

worker_processes 1;

But the documentation says:

"The optimal value depends on many factors including (but not limited to) the number of CPU cores, the number of hard disk drives that store data, and load pattern. When one is in doubt, setting it to the number of available CPU cores would be a good start (the value “auto” will try to autodetect it)." (bold emphasis mine)

What is the ideal number for me? The performance of Nginx on my laptop doesn't really matter. But for my side-projects it's important to have a fast Nginx, since it serves static HTML and lots of static assets. However, on my personal servers I have a bunch of other resource-hungry stuff going on that I know is more likely to need the resources, like Elasticsearch and uwsgi.

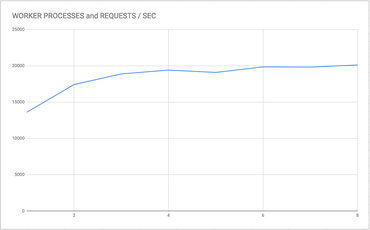

To figure this out, I wrote a benchmark program that requested a small index.html about 10,000 times across 10 concurrent clients with hey.

hey -n 10000 -c 10 http://peterbecom.local/plog/variable_cache_control/awspa
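The benchmark program itself isn't in this post, so here's a hypothetical sketch of what the loop around that hey command might look like. It assumes nginx and hey are on your PATH, that you're allowed to write to nginx.conf, and that nginx -s reload picks up the new worker count; it also doesn't wait for the reload to settle. set_worker_processes, requests_per_sec and best_of are made-up names. It keeps the best of 10 runs, my reading of the "BEST" in the results.

```python
import re
import subprocess

# Paths from this post; adjust for your own setup.
NGINX_CONF = "/usr/local/etc/nginx/nginx.conf"
URL = "http://peterbecom.local/plog/variable_cache_control/awspa"


def set_worker_processes(conf: str, n: int) -> str:
    """Return nginx.conf text with the worker_processes directive set to n."""
    new_conf, count = re.subn(
        r"(?m)^([ \t]*)worker_processes[ \t]+\S+;",
        lambda m: f"{m.group(1)}worker_processes {n};",
        conf,
    )
    if count != 1:
        raise ValueError(f"expected one worker_processes line, found {count}")
    return new_conf


def requests_per_sec(hey_output: str) -> float:
    """Pull the Requests/sec figure out of hey's summary output."""
    match = re.search(r"Requests/sec:\s+([\d.]+)", hey_output)
    if match is None:
        raise ValueError("no Requests/sec line in hey output")
    return float(match.group(1))


def best_of(workers: int, runs: int = 10) -> float:
    """Set worker_processes, reload nginx, run hey `runs` times, keep the best."""
    with open(NGINX_CONF) as f:
        conf = f.read()
    with open(NGINX_CONF, "w") as f:
        f.write(set_worker_processes(conf, workers))
    subprocess.run(["nginx", "-s", "reload"], check=True)
    best = 0.0
    for _ in range(runs):
        result = subprocess.run(
            ["hey", "-n", "10000", "-c", "10", URL],
            capture_output=True, text=True, check=True,
        )
        best = max(best, requests_per_sec(result.stdout))
    return best
```

Looping for n in range(1, 9): print(n, best_of(n)) would then produce one best number per worker count.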

I ran this 10 times for each worker_processes value, changing the value in the nginx.conf file between rounds and keeping the best result. Here's the output:

1 WORKER PROCESSES BEST : 13,607.24 reqs/s

2 WORKER PROCESSES BEST : 17,422.76 reqs/s

3 WORKER PROCESSES BEST : 18,886.60 reqs/s

4 WORKER PROCESSES BEST : 19,417.35 reqs/s

5 WORKER PROCESSES BEST : 19,094.18 reqs/s

6 WORKER PROCESSES BEST : 19,855.32 reqs/s

7 WORKER PROCESSES BEST : 19,824.86 reqs/s

8 WORKER PROCESSES BEST : 20,118.25 reqs/s

Or, as a graph:

Now note, this is done here on my MacBook Pro. Not on my Ubuntu DigitalOcean servers. For now, I just want to get a feeling for how these numbers correlate.
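To put numbers on the shape of that curve, divide each result by the single-worker one. A second worker alone gives about 1.28x. Going from 4 to 8 workers only adds roughly another 3.6%, ending at about 1.48x. The arithmetic uses nothing but the numbers from the results:

```python
# Best requests/sec for each worker_processes value, from the results above.
best_rps = {
    1: 13607.24, 2: 17422.76, 3: 18886.60, 4: 19417.35,
    5: 19094.18, 6: 19855.32, 7: 19824.86, 8: 20118.25,
}

# Throughput relative to a single worker process.
baseline = best_rps[1]
speedups = {workers: rps / baseline for workers, rps in best_rps.items()}

for workers, factor in speedups.items():
    print(f"{workers} workers: {best_rps[workers]:>9,.2f} reqs/s  ({factor:.2f}x)")
```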

Conclusion

The benchmark isn't good enough. The numbers are pretty stable, but I'm doing this on my laptop with multiple browsers idling, Slack, and Spotify running. Clearly, the throughput goes up a bit when you allocate more workers, but if anything can be learned from this, it's to start by going beyond 1 for a quick win, and from there do some poking and more exhaustive benchmarks. And if you have time to go deeper on this, don't forget to look at the combination of worker_connections and worker_processes.