uwsgi is the latest and greatest WSGI server and promises to be the fastest possible way to run Nginx + Django. Proof here. But! Is it that simple? Especially if you're involving Django herself.

So I set out to benchmark good old threaded fcgi and gunicorn. Then, with a source-compiled nginx with the uwsgi module baked in, I also benchmarked uwsgi. The first mistake I made was testing a Django view that was using sessions and other crap. I had profiled the view to make sure it wouldn't be the bottleneck, as it appeared to take only 0.02 seconds per request. However, with fcgi, gunicorn and uwsgi I kept being stuck on about 50 requests per second. Why? 1/0.02 = 50.0!!! Clearly the slowness of the Django view was the bottleneck (for the curious, what took all of 0.02 seconds was the need to create new session keys and put them into the database).
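The 50 requests/second ceiling can be sketched as a simple bound: throughput is at most the number of requests being worked on at once divided by the time each one takes. This is a rough model, assuming (as the numbers suggest, though the post does not confirm it) that the session-creating requests were effectively handled one at a time. The function name is made up for illustration.

```python
def max_requests_per_second(seconds_per_request, concurrent_requests=1):
    """Upper bound on throughput when each request takes a fixed time.

    concurrent_requests is how many requests truly run in parallel;
    1 means everything is serialized (the assumption for the slow view).
    """
    return concurrent_requests / seconds_per_request


# The slow, session-creating view took about 0.02 seconds per request.
serialized_limit = max_requests_per_second(0.02)

# If four requests could really run in parallel, the bound would be 4x higher.
parallel_limit = max_requests_per_second(0.02, concurrent_requests=4)

print(round(serialized_limit), round(parallel_limit))
```

Under this model, adding workers only helps if the slow part (here, writing session rows) can actually happen in parallel.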

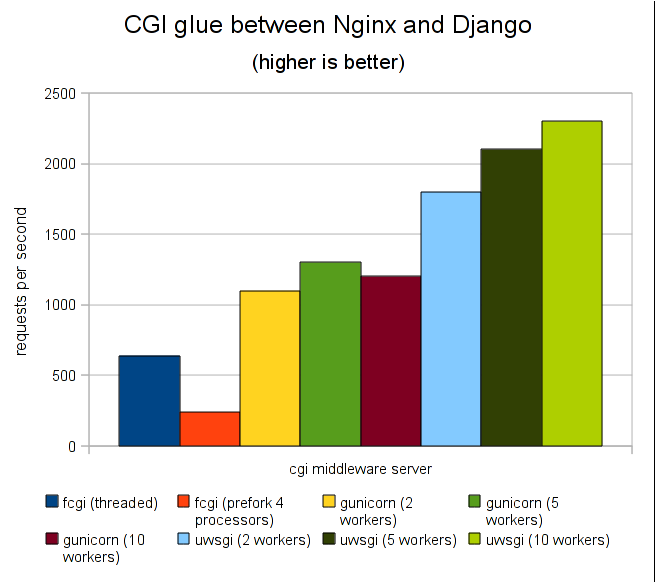

So I wrote a really dumb Django view with no sessions middleware enabled. Now we're getting some interesting numbers:

fcgi (threaded) 640 r/s

fcgi (prefork 4 processors) 240 r/s (*)

gunicorn (2 workers) 1100 r/s

gunicorn (5 workers) 1300 r/s

gunicorn (10 workers) 1200 r/s (?!?)

uwsgi (2 workers) 1800 r/s

uwsgi (5 workers) 2100 r/s

uwsgi (10 workers) 2300 r/s

(* this made my computer exceptionally sluggish as CPU went through the roof)

If you're wondering why the numbers appear to be rounded it's because I ran the benchmark multiple times and guesstimated an average (also obviously excluded the first run).
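For reference, a "really dumb" endpoint of the kind used for these numbers could look something like the plain WSGI callable below. This is only a stand-in: the actual test used a Django view that isn't shown in this post, and the name `application` and the response body are assumptions. It touches no sessions and no database, so the server itself becomes the thing being measured.

```python
def application(environ, start_response):
    """Smallest useful WSGI app: no sessions, no database, fixed body."""
    body = b"Hello, benchmark!\n"
    headers = [
        ("Content-Type", "text/plain"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    # WSGI expects an iterable of byte strings.
    return [body]
```

Saved in a module (say a hypothetical `dumb.py`), a callable like this can be served with something like `gunicorn -w 2 dumb:application`.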

Misc notes

- For gunicorn it didn't change the numbers if I used a TCP (e.g. 127.0.0.1:9000) or a UNIX socket (e.g. /tmp/wsgi.sock)

- On the upstream directive in nginx it didn't impact the benchmark to set fail_timeout=0 or not.

- fcgi on my laptop was unable to fork new processors automatically in this test so it stayed as 1 single process! Why?!!

- When you get more than 2,000 requests/second the benchmark itself and the computer you run it on become wobbly. I managed to get 3,400 requests/second out of uwsgi but then the benchmark started failing requests.

- These tests were done on an old 32bit dual core Thinkpad with 2Gb RAM :(

- uwsgi was a bitch to configure. Most importantly, who the hell compiles source code these days when packages are so much much more convenient? (Fry-IT hosts around 100 web servers that need patching and love)

- Why would anybody want to use sockets when they can cause permission problems? TCP is so much more straightforward.

- changing the number of ulimits to 2048 did not improve my results on this computer

- gunicorn is not available as a Debian package :(

- Adding too many workers can actually damage your performance. See example of 10 workers on gunicorn.

- I did not bother with mod_wsgi since I don't want to go near Apache and, to be honest, last time I tried I got such mysterious errors from mod_wsgi that I ran away screaming.

Conclusion

gunicorn is the winner in my eyes. It's easy to configure and get up and running, it's certainly fast enough, and I don't have to worry about stray threads being created willy-nilly like with threaded fcgi. uwsgi is definitely worth coming back to the day I need to squeeze out a few more requests per second, but right now it just feels too inconvenient as I can't convince my sys admins to maintain compiled versions of nginx for the little extra benefit.

Having said that, the day uwsgi becomes available as a Debian package I'm all over it like a dog on an ass-flavored cookie.

And the "killer benefit" with gunicorn is that I can predict the memory usage. I found, on my laptop: 1 worker = 23Mb, 5 workers = 82Mb, 10 workers = 155Mb and these numbers stayed like that very predictably which means I can decide quite accurately how much RAM I should let Django (ab)use.
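To show how those three measurements can be turned into a RAM budget, here is a small sketch that fits a straight line through them (ordinary least squares). The linear model and the function names are my assumptions; only the three data points come from the measurements above.

```python
def fit_line(points):
    """Least-squares fit of y = slope * x + intercept through (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# (workers, megabytes) as measured on the laptop.
measured = [(1, 23), (5, 82), (10, 155)]
per_worker_mb, base_mb = fit_line(measured)


def estimated_mb(workers):
    """Estimated total memory for a given number of gunicorn workers."""
    return base_mb + per_worker_mb * workers


print(f"{per_worker_mb:.1f} MB per worker, {base_mb:.1f} MB fixed overhead")
```

The fit comes out at a little under 15 MB per extra worker on top of a small fixed overhead, and it reproduces all three measurements to within about a megabyte, which is what "predictable" means here.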

UPDATE:

Since this was published we, in my company, have changed all Djangos to run over uWSGI. It's proven faster than any alternative and extremely stable. We actually started using it before it was merged into core Nginx, but considering how important this is and how many sites we have, it's not been a problem to run our own Nginx package.

Hail uWSGI!

Voila! Now feel free to flame away about the inaccuracies and what multitude of more wheels and knobs I could/should twist to get even more juice out.

Comments

Latest version of gunicorn can use gevent for providing fast asynchronous networking. Please reconfigure gunicorn and show us updated benchmark. I am almost sure gunicorn + gevent combination would be the best.

Cool! I'll see what I can do. It's a bit unfair to spend too much time tuning since that could be said about all approaches and then I'll end up spending too much time on this. My test was basic by choice and I'm aware that that's not entirely fair either.

By the way, I'm not doing any long polling requests in this basic view. Why should I use Gevent?

uwsgi can run with gevent too, look: http://projects.unbit.it/uwsgi/wiki/Gevent

some comments:

- it's not uwsgi's fault that it has to be compiled from source; it's an nginx architecture limitation. (hopefully someday it will grow a sane module loading architecture)

- the best number is almost 9.6 times better than the worst, but that doesn't mean you can get almost 10x performance in the real world. 240 reqs/sec is about 4.2ms per request, while 2300 reqs/sec is 0.43ms/req. let's say it's 4ms less per request. But your initial test (with a more realistic Django page) was 50 reqs/sec or 20ms/req. take 4ms off this time and you get 16ms/req, or 62.5 req/sec, just a 25% improvement. and that's over a pathological case. IOW: better to optimize the app (with good DB queries and good caches), and forget about the server.
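The arithmetic in the comment above can be checked with a few lines of Python. This is just a worked version of the same reasoning; the function names are made up for illustration, and the 4 ms saving is the commenter's rounded figure.

```python
def ms_per_request(requests_per_second):
    """Average time per request in milliseconds."""
    return 1000.0 / requests_per_second


def throughput_after_saving(base_rps, saved_ms):
    """Requests/second once a fixed per-request saving is subtracted."""
    return 1000.0 / (ms_per_request(base_rps) - saved_ms)


slowest_ms = ms_per_request(240)    # fcgi prefork
fastest_ms = ms_per_request(2300)   # uwsgi with 10 workers
saving_ms = slowest_ms - fastest_ms # a bit under 4 ms

# Apply the rounded 4 ms saving to the realistic 50 req/s view.
improved = throughput_after_saving(50, 4)
gain_percent = (improved / 50 - 1) * 100

print(f"{slowest_ms:.2f} ms vs {fastest_ms:.2f} ms, saving {saving_ms:.2f} ms")
print(f"{improved} req/s, {gain_percent:.0f}% better")
```

The point stands either way: a fixed saving of a few milliseconds is a huge win on a sub-millisecond view and a modest one on a 20 ms view.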

Interesting about the module loading architecture in nginx. Didn't know it was Nginx being awkward. For all its awesome performance it does have its warts.

For certain pages, where the app server does a lot, you're stuck with that as the bottleneck. But if you have very light views, e.g. a simple cacheable JSON view, it makes sense to make sure you have the best server to get an extra free boost and a more responsive and user-friendly web page.

if it's cacheable, put it in memcached and nginx can fetch it directly, without calling the app. in fact, you could even write to an ephemeral file; nginx is just as fast on the filesystem as memcached on RAM

Much of stuff here on peterbe.com is done that way. I benchmarked the hell out of that too and found it much much faster than Varnish and Varnish was much faster than Squid. It's a bit clunky to invalidate but when it works it works wonders.

As an example, I had this resource (/plog/importance-of-public-urls/display-thumbnail/ecs.png) in Varnish first.

Just ran some benchmarks on it and got a hefty 3,500 requests/second. Put it in front of Nginx and re-ran at 7,000 requests/second. That's pretty cool!

Some consider the module system of nginx to be a strength.

Dynamic loading would be a decent middle ground, but there are benefits to making modules be determined at compile time and inside nginx. Namely, you get a smaller binary with less extraneous code paths that you can EASILY distribute to N servers (because the modules are in the binary).

Hi,

Thank you for this bench. Just a little correction, gunicorn is available as debian package in launchpad :

https://launchpad.net/~bchesneau/+archive/gunicorn

Maybe it could be used for debian too ? Let me know

I installed it with pip and that was very easy. The fact that it's not in a "proper" debian repo just means it's not very mature yet. Not your fault and nothing you can do. Time will solve that problem.

Debian Developer here. I was looking into packaging uwsgi but the rather unfriendly nginx module loading would make it quite an intensive thing to keep up to date.

Thanks for sharing the results! It seems to reaffirm the results obtained in my benchmarks, which is a good thing ;)

I agree that uWSGI can be somewhat difficult to setup and I also did not see any great performance differences between TCP sockets and UNIX sockets.

Were you not able to predict the memory usage of the uWSGI setup as well? I did not really experiment with multiple workers but I noticed it to be on the low side.

Regarding memory usage when using uWSGI I was unfortunately not able to measure it. The tools I used didn't make it easy enough. I did look at some outputs but didn't write it down but from what I can remember it barely moved even at high number of workers. I thought it was my fault but perhaps it was that memory efficient.

Thanks for the test. But if all of the servers produce only 50 requests/second on a real-world example (I would think most if not all apps use sessions), why should we care about this performance? And why are sessions behaving as if they were running single-threaded?

When the Django view is really slow, which server you use doesn't really matter. In reality an app has both heavy and light views. For the light ones why waste time with slow and crappy servers if you don't have to?

Also, getting it right means that if the traffic is high you get much better memory consumption on the server.

"Real world" apps don't use the DB for sessions, they use the cache backend -> memcached. Also "real world" apps cache aggressively so most views aren't hitting the "slow" app/db.

I think the one I'm using is a hybrid of DB and memcached. Haven't looked into why it can't be 100% memcache.
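For readers wondering what these two options look like in practice: Django selects the session store with the `SESSION_ENGINE` setting. The fragment below is a sketch of a `settings.py` excerpt, assuming a cache (for example memcached) is already configured under `CACHES`; it is not taken from the configuration used in the benchmark.

```python
# settings.py (excerpt): pick ONE session engine.

# Pure cache-backed sessions: fastest, but session data is lost if the
# cache is flushed or an entry is evicted.
SESSION_ENGINE = "django.contrib.sessions.backends.cache"

# Hybrid alternative: reads come from the cache, every write also goes
# to the database so sessions survive a cache restart.
# SESSION_ENGINE = "django.contrib.sessions.backends.cached_db"
```

The hybrid engine still writes a database row when a session is created, so a view that creates a new session on every request would keep paying that cost.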

Thanks for doing the benchmark.

- When you said 5 workers for gunicorn or uwsgi, was that 5 processes or 5 threads in a single process?

- if it takes 0.02 sec to create a single session, shouldn't it be able to process more than 50 req per sec since sessions would be created concurrently. The only way that the 50 req/sec becomes the limit is if everything is being serialized in a single thread. Yet that appears to be what happened. Can you shed some light on that?

Thanks.

With gunicorn, yes, 5 workers = 5 processes but internally I'm not sure what it's actually called. Unlike threads these things start immediately. I.e. they are spawned straight away. Perhaps on an operating system level they are threads.

I don't know why Postgres wasn't able to create and commit rows concurrently.

uWSGI is a pain to configure, and there are some interesting failures when using UNIX sockets at high concurrency levels (try it with ab -n 10000 -c 255 against nginx/django/uwsgi to see what I mean) caused by some UNIX socket retry issues. Overall, however, its performance is very good. TCP is almost exactly as fast, so it is worth using instead.

If you REALLY want to tune Django though, take a look at some lower hanging fruit; the templates are HORRIFIC. Jinja2 is not exactly a drop in replacement, but it is close, and it is much faster. I have a test with a simple UL>LI list with 300 li elements, which renders at 150 pages per second in django templates, and over 1000 pages/sec on Jinja2, same middleware and input data.

And I agree with the multiple posters saying that uWSGI is a pain to set up. It is also a pain to run correctly, as its documentation, especially about command line options, is painful. Super powerful, but infoglut trying to figure out what to do and why.

Sorry Derek, but this comparison is very old. Now the uwsgi module is included in nginx by default (as in Cherokee) and a lot of documentation has been rewritten, with a section dedicated to examples contributed by users.

http://projects.unbit.it/uwsgi/wiki/Example

During the last 6 months the project has evolved a lot. Yes, it is not as simple to configure as a SIMPLE wsgi-only server, but there is a lot of work going on in this area, performance is increasing with every release (yes, there is still room for optimization) and memory usage is lower than every competitor. The documentation is surely not of high quality (contributions are welcome), but on the mailing list we are always ready to help people.

Yeah, you got "hackernewsed" today, so I didn't notice the old dates.

The uwsgi module in Nginx wasn't what I was discussing though, I still think that actually deploying a uWSGI container, although easy for simple cases, is still a bit complicated to do for heavy traffic sites, or on UNIX sockets.

This is sad to hear, as uWSGI is mainly targeted for ultra-heavy sites :)

Probably you ran into the low default values (which you can obviously increase/tune). For me tuning is the main component of a heavily loaded site (because it is machine-dependent) and sorry, if you have had problems with UNIX sockets you should have posted something on the nginx or uWSGI list, because it is the first time I have heard of it and for sure someone could help by pointing you in the right direction. By the way, I do not want to become annoying. Open Source is about choice and uWSGI would never have developed so fast without good competition.

We have now been running several sites on uWSGI. None of these sites have high traffic but there are many sites so the low maintenance due to the stunning stability has proven very productive for us. Keep up the good work!

PS. Note that I've added an update to this original blog post.

"Why would anybody want to use sockets when they can cause permission problems? TCP is so much more straight forward."

I only use unix sockets with uWSGI and it is very straightforward, never had any problems. It is very convenient to use named sockets instead of juggling port numbers if you are using multiple uwsgi processes for multiple sites on the same server.

You shouldn't even have to use CHOWN on or create the unix socket file yourself. Just specify the socket name and add "-C" or "<chmod-socket>666</chmod-socket>" to your uWSGI config.

Thanks for the tip!

very good

Both projects (uWSGI and gunicorn) have come a long way since this article was written. I want to contribute one little finding.

- These tests were done on an old 32bit dual core Thinkpad with 2Gb RAM

- Adding too many workers can actually damage your performance. See example of 10 workers on gunicorn.

In the recent documentation I've found the explanation for the drop in gunicorn's performance at 10 workers.

- Generally we recommend (2 x $num_cores) + 1 as the number of workers to start off with.

read more at http://gunicorn.org/design.html#how-many-workers
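As a quick illustration of that rule of thumb, the sketch below computes the suggested starting point. The function name is made up, and falling back to the local machine's core count via `os.cpu_count()` is an assumption about how one would apply it.

```python
import os


def suggested_workers(num_cores=None):
    """Starting worker count per the (2 x cores) + 1 rule of thumb."""
    if num_cores is None:
        # os.cpu_count() can return None on unusual platforms.
        num_cores = os.cpu_count() or 1
    return 2 * num_cores + 1


# The benchmark laptop was a dual core machine.
print(suggested_workers(2))
```

For the dual core Thinkpad this gives 5 workers, which is also where gunicorn peaked in the numbers above (1300 r/s with 5 workers versus 1200 r/s with 10).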